Azure DevOps Implementation Report for a Typical .NET Application

What follows is a report I wrote for an assignment in my MSc in DevOps, which I am currently studying at Technological University Dublin. It was submitted in December 2023 and received a B+ grade. The assignment involved refactoring a simple .NET web application and developing a CI/CD pipeline for it.

Link To Azure DevOps Project - https://dev.azure.com/coffeyrichard/Blood%20Pressure%20Calculator

Refactoring of App

The main refactoring was to the application's business logic in the BloodPressure class (BloodPressure.cs). An "Ideal" BP category was introduced, replacing "Normal", and BPCategory display names are now provided through extension methods. Instead of Data Annotations, BloodPressure uses custom property setters for range validation, throwing exceptions for invalid values. The Category property logic was also completed so that every valid reading is mapped to the correct category.
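The business logic described above can be sketched as follows. The app itself is C#; this is a Python model for illustration, not the project's code. Only the "Ideal" category comes from this report: the other category names, the valid input ranges (systolic 70 to 190, diastolic 40 to 100 mmHg) and the category thresholds are assumptions based on a common blood pressure chart. The Python property setters stand in for the C# custom setters, and the `display_name` property plays the role of the extension method.

```python
from enum import Enum


class BPCategory(Enum):
    # Only "Ideal" is named in the report; the other names are assumptions.
    LOW = "Low Blood Pressure"
    IDEAL = "Ideal Blood Pressure"
    PRE_HIGH = "Pre-High Blood Pressure"
    HIGH = "High Blood Pressure"

    @property
    def display_name(self) -> str:
        # Stands in for the C# extension method that returns a display name.
        return self.value


class BloodPressure:
    # Assumed valid input ranges in mmHg (not stated in the report).
    SYSTOLIC_MIN, SYSTOLIC_MAX = 70, 190
    DIASTOLIC_MIN, DIASTOLIC_MAX = 40, 100

    def __init__(self, systolic: int, diastolic: int) -> None:
        # Assignments go through the validating setters below.
        self.systolic = systolic
        self.diastolic = diastolic

    @property
    def systolic(self) -> int:
        return self._systolic

    @systolic.setter
    def systolic(self, value: int) -> None:
        # Custom setter instead of a declarative range annotation.
        if not self.SYSTOLIC_MIN <= value <= self.SYSTOLIC_MAX:
            raise ValueError(f"Invalid systolic value: {value}")
        self._systolic = value

    @property
    def diastolic(self) -> int:
        return self._diastolic

    @diastolic.setter
    def diastolic(self, value: int) -> None:
        if not self.DIASTOLIC_MIN <= value <= self.DIASTOLIC_MAX:
            raise ValueError(f"Invalid diastolic value: {value}")
        self._diastolic = value

    @property
    def category(self) -> BPCategory:
        # Assumed thresholds; higher categories are checked first so an
        # elevated value in either reading takes precedence.
        if self.systolic >= 140 or self.diastolic >= 90:
            return BPCategory.HIGH
        if self.systolic >= 120 or self.diastolic >= 80:
            return BPCategory.PRE_HIGH
        if self.systolic >= 90 and self.diastolic >= 60:
            return BPCategory.IDEAL
        return BPCategory.LOW
```

For example, `BloodPressure(110, 70).category.display_name` gives "Ideal Blood Pressure", while `BloodPressure(200, 80)` raises a `ValueError` because the systolic value is outside the assumed range.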

Another improvement was made to the Startup class (Startup.cs). Although the project targeted .NET 6, the startup class had evidently been carried over from an older ASP.NET Core version: it used IHostingEnvironment, which has been obsolete since ASP.NET Core 3.0, rather than its replacement, IWebHostEnvironment. I re-engineered the startup class accordingly.

Also, for all the tests performed throughout the pipeline, a new project called BPCalculator.Tests was added to the Blood Pressure solution. This keeps the test files, along with their binaries and packages, from cluttering up the application’s main project folder.

Certain new packages had to be added to the main project’s .csproj file, including MSTest (for the unit tests) and Application Insights. For Application Insights, the startup class also had to be configured with the connection string so that App Insights could gather telemetry from the application’s code.

Finally, I did some refactoring of the application’s HTML and CSS, essentially just “prettying” it up a bit.

Azure Infrastructure

Azure Resource Group

Azure DevOps can deploy to many different hosting environments and cloud providers, but it naturally offers very good integration with Azure, both being Microsoft products. Since the app is also .NET (another Microsoft technology), I decided to go with an all-Microsoft solution for deployment and hosting.

To deploy to Azure, App Service was chosen, as it naturally fits the scenario of deploying a .NET web application with minimal overhead (it is PaaS infrastructure). As will be seen in the pipeline section, I chose to create three distinct app services: one for Development (DEV), one for User Acceptance Testing (UAT) and one for Production (PROD). The PROD app service also has a staging deployment slot, which is discussed further in the pipeline section. I followed typical Azure naming conventions for the app services, app-bpcalc-[env], and organised all of the project’s resources into a resource group called rg-bpcalc.
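The naming convention above can be made concrete with a small helper. This is an illustrative Python sketch, not part of the project; the function name `resource_names` and the dictionary keys are hypothetical, and the `app/slot` form for the staging slot follows how this report writes it.

```python
ENVIRONMENTS = ("dev", "uat", "prod")


def resource_names(app: str = "bpcalc") -> dict:
    """Build resource names using the rg- and app- prefixes (hypothetical helper)."""
    names = {"resource_group": f"rg-{app}"}
    for env in ENVIRONMENTS:
        # One app service per environment: app-<app>-<env>.
        names[env] = f"app-{app}-{env}"
    # The staging slot lives on the PROD app service, written as app/slot.
    names["prod_staging"] = f"app-{app}-prod/staging"
    return names
```

With the default argument, `resource_names()["uat"]` is `"app-bpcalc-uat"` and `resource_names()["resource_group"]` is `"rg-bpcalc"`, which keeps the app-service and resource-group prefixes clearly distinct.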

All three app services share the same App Service plan on the Standard tier, which is the minimum pricing tier that supports deployment slots. I also feel it is important to keep the testing and production environments as similar to each other as possible, which means putting them on the same App Service plan (and thus giving them access to the same compute resources).

Each of the three app services also has its own linked Application Insights resource for collecting real-time telemetry. To connect each app to its App Insights resource correctly, I had to make sure the App Insights Instrumentation Key was copied into each app’s configuration settings, which was all done through the Azure Portal. Together with the App Insights code added to the startup class (described in the previous section), this made it easy to watch statistics and metrics flow into the App Insights reports as each app was used.

Pipeline Implementation

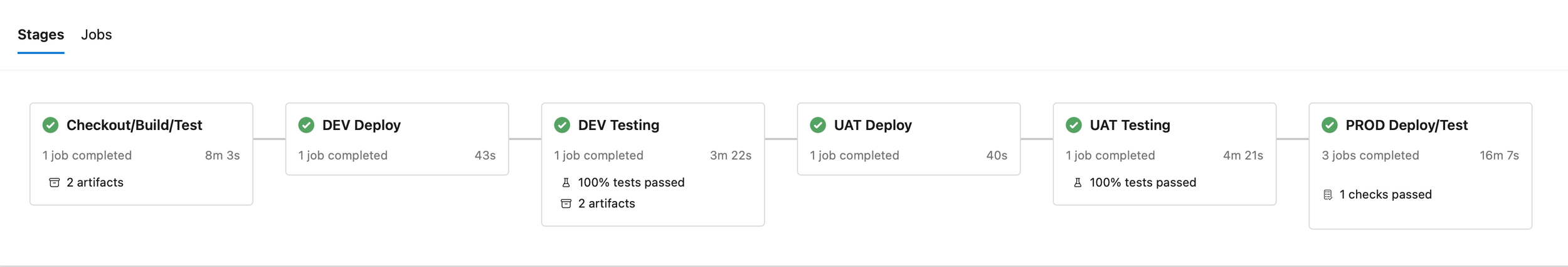

The 6 stages of the pipeline

It was decided at the outset to simulate a real-life deployment as closely as possible, which is why I went with a typical Build, DEV, UAT, PROD deployment sequence. At each stage along the way, various tests are run before the application is allowed to move on, and each stage must complete successfully before the next one starts.
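The gating rule above can be modelled in a few lines. This is a Python sketch of the behaviour, not pipeline code. In Azure Pipelines YAML, stages run sequentially in the order they are defined by default, each depending on the one before it and running only if it succeeded, so no explicit `dependsOn` is strictly required for this. The stage names come from this report, except `ProdDeploy`, which is a hypothetical name for the final PROD Deploy/Test stage.

```python
# Stage names from this report; "ProdDeploy" is a hypothetical name
# for the final PROD Deploy/Test stage.
STAGES = ["Build", "DevDeploy", "DevTest", "UATDeploy", "UATTest", "ProdDeploy"]


def run_pipeline(failing: set) -> list:
    """Run the stages in order and return the ones that completed.

    A stage listed in `failing` fails, and no later stage is started.
    """
    completed = []
    for stage in STAGES:
        if stage in failing:
            break  # a failed stage blocks every stage after it
        completed.append(stage)
    return completed
```

For instance, a failure in `UATTest` means only the four earlier stages complete and nothing reaches PROD.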

The whole pipeline was specified in YAML (azure-pipelines.yml) within the project. I did not use the Azure DevOps Classic Editor to build the pipeline, as that approach is now deprecated and loses the benefit of “pipelines as code” that can be version-controlled in Git.

The pipeline runs on every push to the project’s main branch and uses the latest Windows VM image for its build and deployment agents. Many of the tests described below also required me to first install an extension into my Azure DevOps organisation before I could use them.

Checkout/Build/Test

In the Build stage, the code is first checked out from the Git repo’s main branch. The pipeline then prepares a SonarCloud analysis; SonarCloud is the cloud-based version of the static code analysis tool SonarQube. Setting up SonarCloud also involved registering a SonarCloud account, generating a Personal Access Token (PAT) in Azure DevOps and pasting it into my SonarCloud account’s configuration settings to integrate the two.

The next steps restore the NuGet packages required for the build, followed by a WhiteSource scan to identify vulnerabilities in the project’s packages. A GitLeaks scan then checks the git history for exposed secrets, passwords or other sensitive information. Together these steps provide some basic security checks early in the pipeline, just before the build starts.
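GitLeaks detects secrets largely by matching content against a set of regex rules. The toy Python scanner below illustrates that rule-based idea on plain text only; it is not GitLeaks, does not read git history, and its two rules and rule ids are hypothetical examples (the `AKIA` prefix is the well-known form of AWS access key IDs).

```python
import re

# Two illustrative rules; real GitLeaks ships a much larger rule set.
RULES = {
    "aws-access-key-id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "generic-password": re.compile(r"(?i)password\s*[:=]\s*['\"][^'\"]{6,}['\"]"),
}


def find_secrets(text: str) -> list:
    """Return (line number, rule id) for every line that matches a rule."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule_id, pattern in RULES.items():
            if pattern.search(line):
                findings.append((lineno, rule_id))
    return findings
```

A real scan would also need an allow-list for false positives such as test fixtures, which GitLeaks supports through its configuration.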

The solution is built next, and a SonarCloud analysis is then run, with the results published to SonarCloud for review. The final step in this stage publishes the build artifacts, which the deployment stages that follow depend on. These artifacts can also be viewed within Azure DevOps on the tabs of each pipeline run’s summary page.

DEV Deploy

The DevDeploy stage prepares the DEV environment for the application. It downloads the build artifacts produced in the Build stage and deploys them to the DEV app service in Azure (app-bpcalc-dev), so that the latest build of the application is available for preliminary testing in a development context.

DEV Testing

Unit Tests Coverage

The DevTest stage verifies the application’s functionality in the DEV environment. Unit tests written with MSTest are run against BloodPressure.cs to confirm that individual units of the application behave as intended. After the tests run, the code coverage results are published to show which portions of the code were exercised; these results can be viewed within Azure DevOps itself.

This stage also generates SpecFlow living documentation. SpecFlow is a behaviour-driven development (BDD) framework for .NET, and its living documentation gives stakeholders a readable picture of how the application behaves. The scenarios are first written in plain English in a Gherkin feature file and are then executed as tests. Again, the report can be viewed on a tab within the pipeline run without leaving Azure DevOps.

I made a conscious decision to run the unit tests between the DEV and UAT deployments. Firstly, I wanted a slightly quicker build for developers to work with; secondly, SpecFlow requires the unit test artifact, so it was easier to keep both tasks within the same stage. In my opinion, BDD testing logically belongs right before deploying to UAT, as it helps ensure alignment with expectations, provides early feedback and supports continuous integration.

UAT Deploy

Moving on to UAT, the UATDeploy stage downloads the build artifacts again and deploys them to the UAT app service in Azure (app-bpcalc-uat). This gives end users an environment in which to validate the application's behaviour before it is released to production.

UAT Testing

Playwright (E2E) Tests

The UATTest stage is the main check that the application is ready for production. It begins by installing Node.js, which the Playwright tests require. Playwright, Microsoft’s browser automation framework for end-to-end (E2E) testing, is then used to run E2E tests that verify the application's behaviour across different scenarios and workflows, such as entering systolic and diastolic values that are out of range.

After testing, the results are published under the same tab in Azure DevOps as the unit test results, so stakeholders can see how ready the application is. As before, if any of these tests fail, the pipeline does not proceed to the next and final stage.

As with the earlier stages, I deliberately placed the E2E tests right before the production deployment, as they exercise the application as a whole, catch environment-specific issues and prepare it for real-world usage.

PROD Deploy/Test

Blazemeter Performance Test Settings

The final stage targets the production environment, specifically a staging slot within it. The application is deployed to this staging slot (app-bpcalc-prod/staging), allowing for last-minute checks and validations. Before this happens, a member of the project team must approve a manual trigger. This extra safeguard before production deployments was again implemented in YAML.

Once the manual trigger has been approved and the app has been deployed to the staging slot, performance tests are run using BlazeMeter, a cloud-based performance testing service built on JMeter, to make sure the application can handle the anticipated load. As with SonarCloud, I had to sign up for a free BlazeMeter account. I then wrote the JMX (JMeter test) file, which is in the repo, and uploaded it to my BlazeMeter account. This file defines a basic load scenario in which 20 virtual users visit the staging slot app, enter certain values and click the Submit button. The ramp-up period is 1 minute, and the test then runs for 3 minutes before cooling down. If any non-200 response is received during that time, or any request takes more than 10 seconds to respond, the test fails and the final swap task does not run.
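The pass/fail criteria and ramp-up described above can be expressed as a short Python sketch. This is not BlazeMeter's API or configuration format; the `Sample` type and function names are hypothetical, and the ramp-up calculation assumes JMeter's linear behaviour, where threads start evenly spaced across the ramp-up period.

```python
from dataclasses import dataclass

MAX_RESPONSE_S = 10.0  # response-time limit quoted in this report


@dataclass
class Sample:
    status: int       # HTTP status code of one request
    elapsed_s: float  # response time in seconds


def load_test_passed(samples: list) -> bool:
    """Fail on any non-200 response or any response slower than 10 seconds."""
    return all(s.status == 200 and s.elapsed_s <= MAX_RESPONSE_S for s in samples)


def ramp_interval_s(users: int = 20, ramp_up_s: int = 60) -> float:
    """Seconds between successive virtual-user starts under a linear ramp-up."""
    return ramp_up_s / users
```

With the figures from the scenario, 20 users over a 60-second ramp-up means a new virtual user starts roughly every 3 seconds, and a single slow or failed request is enough to block the slot swap.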

To conclude the stage after a successful BlazeMeter test, the Azure App Service slots are swapped, promoting the staging version of the application to the actual production app (app-bpcalc-prod). Deployment slots also make problems cheap to handle: if the performance tests fail, production is never touched, and if something goes wrong after the swap, swapping the slots back restores the previous production version.
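Why a swap doubles as a rollback can be shown with a toy model. This Python sketch is a simplification, not the Azure API: it ignores details such as instance warm-up and slot-specific settings, and the version labels are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AppService:
    production: str  # version currently served by the production slot
    staging: str     # version currently in the staging slot

    def swap(self) -> None:
        # A swap exchanges the two slots' contents, so the previous
        # production version is kept in staging rather than discarded.
        self.production, self.staging = self.staging, self.production
```

Starting from `AppService(production="v1", staging="v2")`, one `swap()` puts v2 live with v1 waiting in staging, and a second `swap()` restores v1.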

New Feature to be Added

App with new feature implemented. See the red text in the header element.

For the new feature, I chose a simple line of text inserted at the top of the header element of the application’s frontend. The text contains a value determined at runtime that tells the end user whether they are viewing the app in its DEV, UAT or PROD app service environment. The code uses the IWebHostEnvironment service (env) available in .NET 6, which is injected into the Razor page and then checked in an if conditional in the layout HTML, for example env.IsDevelopment(). Although it is not part of the code, each app service also needs an extra configuration setting, ASPNETCORE_ENVIRONMENT (set to Development, UAT or Production), added in the Azure Portal.
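The banner logic can be sketched in Python to show how the environment name drives the text. This is not the Razor code; the label mapping and banner wording are hypothetical. Two ASP.NET Core behaviours are mirrored: when ASPNETCORE_ENVIRONMENT is unset the environment defaults to Production, and environment-name checks such as IsDevelopment() are case-insensitive.

```python
from typing import Optional

# Hypothetical mapping from environment name to the label shown in the header.
LABELS = {"development": "DEV", "uat": "UAT", "production": "PROD"}


def banner_text(aspnetcore_environment: Optional[str]) -> str:
    # ASP.NET Core falls back to "Production" when ASPNETCORE_ENVIRONMENT is unset.
    env = aspnetcore_environment if aspnetcore_environment is not None else "Production"
    # Case-insensitive lookup, like IsDevelopment() and IsEnvironment("UAT").
    label = LABELS.get(env.lower(), env)
    return f"You are viewing the {label} environment"
```

The fallback explains why the portal setting matters: without it, the DEV and UAT app services would both report themselves as PROD.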

Before starting the coding and configuration changes, I created a user story for this task in Azure Boards. I cloned the project’s repository in VS Code and created a new feature branch from main named Feature/User-Story/[id-of-user-story]. After finishing the code, I published the feature branch to Azure DevOps and opened a pull request (PR) to merge it into main.
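The branch naming convention above lends itself to a simple automated check, for example in a local script. This Python sketch is hypothetical and not part of the project; it assumes the work item id is numeric (Azure Boards work item ids are integers) and matches the prefix case-sensitively.

```python
import re
from typing import Optional

# Hypothetical pattern for Feature/User-Story/[id-of-user-story].
BRANCH_PATTERN = re.compile(r"Feature/User-Story/(\d+)")


def work_item_id(branch: str) -> Optional[int]:
    """Return the user story id encoded in a branch name, or None if it doesn't follow the convention."""
    match = BRANCH_PATTERN.fullmatch(branch)
    return int(match.group(1)) if match else None
```

Extracting the id this way could also help confirm that the PR is linked to the same work item the branch was created for.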

Git Branching Strategy & Policies

Branch Policies in action

The procedure outlined in the previous section, creating a feature branch off main and then merging it back with a PR, is the Git feature branch workflow. This is a common Git branching strategy, and Azure DevOps offers a number of branch policies that make it smoother to follow, which I set up for this project. The policies I enabled on the project’s repo were:

Nothing can be pushed directly to the main branch. Every change must go through a PR, which means it must start on a branch created from main. We always want this process to be followed, because every merge to main triggers the pipeline.

All PRs must be approved by at least one person on the project. In a real-world scenario this would obviously be someone other than me, but for the purposes of this demo I am the single approver.

All PRs must have an Azure Boards work item linked to them. This ensures that all work is tracked as part of a Scrum/Agile sprint.

Once a PR has been completed and merged, the feature branch is deleted automatically. This is just a clean-up measure so that completed branches don’t build up over time.
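Taken together, the policies above act as a gate on PR completion. The Python model below is a sketch of that gate, not the Azure DevOps API; the `PullRequest` fields and function names are hypothetical. In Azure Repos, enabling required policies on main is also what blocks direct pushes, since changes must then arrive through a PR.

```python
from dataclasses import dataclass, field


@dataclass
class PullRequest:
    source_branch: str
    target_branch: str
    approvers: list = field(default_factory=list)
    linked_work_items: list = field(default_factory=list)


def policy_violations(pr: PullRequest, min_approvers: int = 1) -> list:
    """List every policy the PR currently fails."""
    violations = []
    if pr.source_branch == pr.target_branch:
        violations.append("changes must come from a separate branch")
    if len(pr.approvers) < min_approvers:
        violations.append(f"needs at least {min_approvers} approver(s)")
    if not pr.linked_work_items:
        violations.append("must be linked to a work item")
    return violations


def complete(pr: PullRequest, branches: set) -> set:
    """Merge the PR if all policies pass and return the remaining branches."""
    problems = policy_violations(pr)
    if problems:
        raise ValueError("; ".join(problems))
    # Mirrors auto-deleting the feature branch after the merge.
    return branches - {pr.source_branch}
```

A PR from `Feature/User-Story/42` with no approver and no linked work item reports two violations; once both are fixed, completing it leaves only `main`.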

Future Improvements

Looking back on this CI/CD pipeline project, these are the improvements I would make, or at least the lessons I would take from it:

I would investigate using a Linux-based VM image for the builds. This would probably have given me better options for using Docker images in my pipeline; while using Docker on Windows machines isn’t impossible, it is definitely harder.

I would investigate splitting my pipeline into smaller YAML template files, one per stage, instead of one big, unwieldy YAML file. This would allow certain elements to be reused across projects; for instance, the build stage could probably be reused in other personal projects down the road.

I would investigate introducing pre-merge pipeline checks (in Azure DevOps, a build validation branch policy) that run on PRs before they are approved. These checks would need to pass, along with any human approvals, before a PR could be merged into main.